The central argument of Cut Through! is that our information environment has changed more quickly than our communications practice.

In this series I have argued that our information environment has become more “D-FACC”:

Democratised

Fragmented

Abundant

Corroded

Concentrated

In Part 1, I looked at how Google Search swept away traditional information gatekeepers in the 1990s. In Part 2, I looked at the way social media and the invention of the iPhone created an infinite scroll of information.

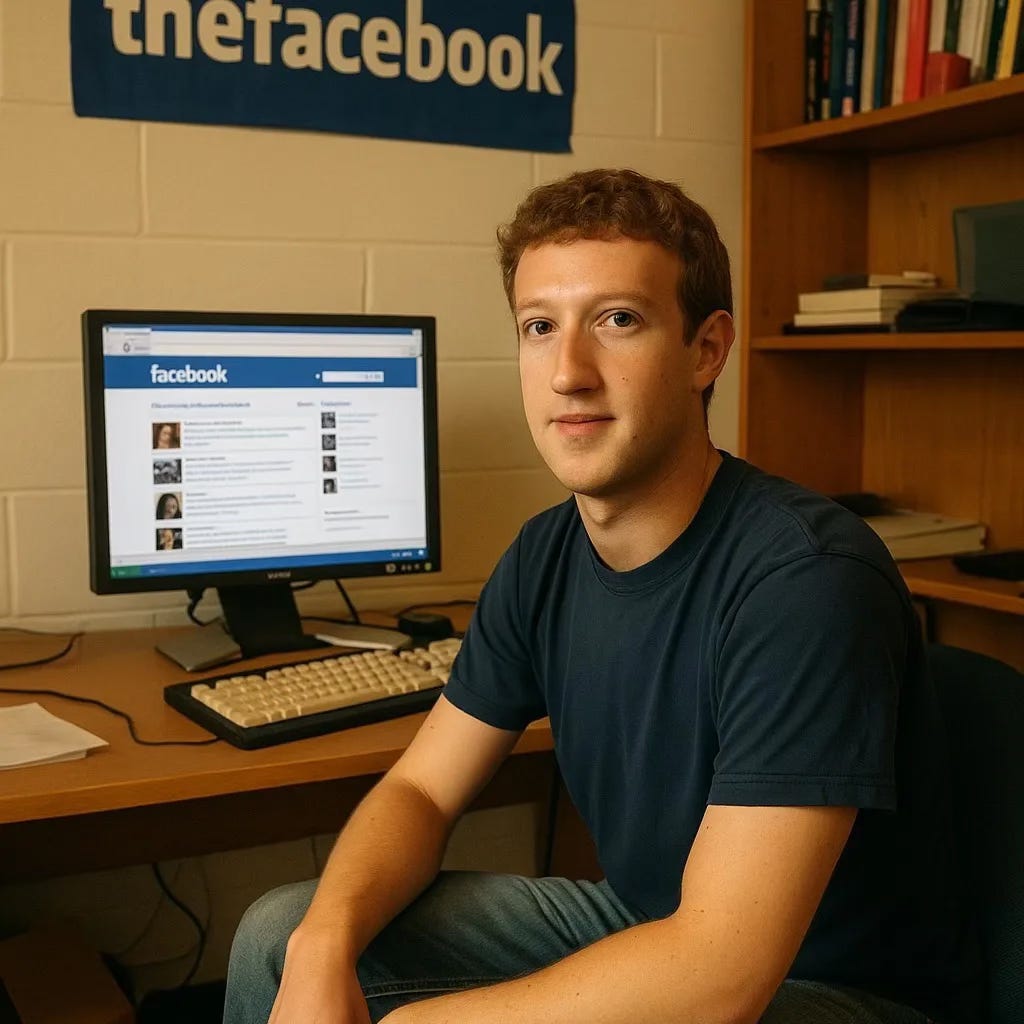

But I said that I would come back to Facebook in Part 3, because what really mattered were the changes that Facebook made in the 2010s.

They were hardly noticed by users at the time, but they would fundamentally alter how we consume information, perhaps even how we perceive reality.

Part 3: The age of the algorithm.

Up until 2009, posts in your Facebook News Feed appeared in reverse chronological order, newest to oldest. You saw what your friends posted, when they posted it.

But then, for the first time, Facebook began to curate your News Feed. They used a machine-learning algorithm that watched what you were more likely to like, share or comment on.

It was designed to show you “more relevant” content.

What it actually did was herald the end of a shared online reality.

Over time every major platform followed suit. Twitter introduced algorithmic timelines. YouTube began recommending videos not by topic but by your engagement history. TikTok, Reddit and LinkedIn all moved to AI-driven feeds that were designed to keep you hooked.

The promise was of personalisation. You would see more of what you liked, and less of what you didn’t. Who could argue with that?

But the consequence was fragmentation. Because your interests are different to mine, your version of the internet became different to mine too.

Instead of everyone seeing the same headlines and messages, people were pulled into increasingly small bubbles of content shaped by their behaviour and peer groups. Two people could be interested in the same public figure and be shown entirely different posts about them.

The world stopped being a stage and became a set of parallel realities.
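
For readers who like to see the mechanics, here is a minimal sketch of how the same three posts can produce one shared feed or two different ones. It is an illustration only, not any platform's real ranking code: the `Post` fields, the per-topic engagement scores and the two viewers are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    topic: str
    timestamp: int  # larger means newer


def chronological_feed(posts):
    """Newest first. Every viewer gets the same order."""
    return sorted(posts, key=lambda p: p.timestamp, reverse=True)


def engagement_feed(posts, topic_engagement):
    """Order by the viewer's past engagement with each topic
    (a hypothetical score), using recency only as a tie-breaker."""
    return sorted(
        posts,
        key=lambda p: (topic_engagement.get(p.topic, 0.0), p.timestamp),
        reverse=True,
    )


posts = [
    Post("Asha", "football", 1),
    Post("Ben", "politics", 2),
    Post("Cara", "gardening", 3),
]

# Hypothetical engagement histories for two viewers.
viewer_a = {"politics": 0.9, "football": 0.2}
viewer_b = {"gardening": 0.8, "football": 0.5}

shared = [p.author for p in chronological_feed(posts)]
feed_a = [p.author for p in engagement_feed(posts, viewer_a)]
feed_b = [p.author for p in engagement_feed(posts, viewer_b)]

print("Chronological:", shared)
print("Viewer A:", feed_a)
print("Viewer B:", feed_b)
```

The chronological feed is identical for everyone. Once past behaviour becomes the sort key, the two viewers see the same posts in a different order, and with a real feed of thousands of posts that difference decides what each person sees at all.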

The choice we made

The algorithms running our timelines were optimised to keep people scrolling and ad revenue rolling. And the content that maximised engagement wasn’t necessarily the content most conducive to healthy public debate. Outrage, fear and tribalism reliably outperformed sober reporting.

We shouldn’t look at the old media environment through rose-tinted glasses. As Tim Wu observes in his book The Attention Merchants, the commoditisation of news is nothing new. Each new medium has scaled the same bargain: content in return for your ears or eyeballs. And anyone who’s read a biography of Lord Northcliffe, Robert Maxwell or Rupert Murdoch won’t be naïve about the motivations of 20th-century media moguls.

However, in the old system, news had been seen as a public good - essential for a functioning democracy and worthy of protection.

The BBC’s first Director-General, Lord Reith, understood that radio was an immensely powerful new technology that could be used for good or ill. He realised that broadcasting could change people and society and that, in the right hands, it could do so for the better. He determined that the BBC should do more than simply give the public what they want and directed that the national broadcaster should “inform, educate and entertain.”

But our global information ecosystem was now being built on the founding principles of Silicon Valley, not those of Lord Reith.

That meant US interpretations of free speech, privacy and corporate autonomy. Like all empires, the big tech companies assumed that their values were as universal and superior as their technology.

Lord Reith may seem hopelessly paternalistic and condescending today, but I wonder whether an algorithm tasked with generating clicks for ad revenue is morally superior to one tasked with creating a more informed and educated society.

Social media algorithms have been optimised for engagement, not quality or truth. And the corrosion of our information environment is not so much a bug as a predictable byproduct of the choices we have made.

By late 2018 this trend reached its apex with the launch of TikTok’s new algorithm. It was qualitatively different from previous algorithms in that it paid no attention whatsoever to who you were, or the following you had. It simply looked at whether an individual piece of content drove certain micro-behaviours - such as a momentary pause or swipe.

It was the ultimate democratisation. To be visible on TikTok you didn’t need authority. You didn’t need a network. You just needed to hold someone’s attention for a few seconds.

Anyone could become a social media star. And now we were getting democracy good and hard. The old media gatekeepers had generally excluded those who had no evidence for the views they held. Now, the eminent scientific researcher and the conspiracy theorist compete as equals before social media’s algorithm.

Its job is brutal triage. If your content survives the first two seconds, it lives.

Everything else dies in the darkness.
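
To make that triage concrete, here is a rough sketch of ranking purely on whether viewers stay past the first two seconds. It is not TikTok's actual system, which is not public: the `Video` fields, the creator names and the numbers are invented for illustration. The point is simply that follower count is recorded but never consulted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    creator: str
    followers: int  # stored, but deliberately ignored by the ranking
    views: int
    held_past_two_seconds: int  # views where the viewer did not swipe away early


def retention_rate(video):
    """Share of views that survived the first two seconds."""
    if video.views == 0:
        return 0.0
    return video.held_past_two_seconds / video.views


def rank_by_retention(videos):
    """Highest retention first. Authority and network play no part."""
    return sorted(videos, key=retention_rate, reverse=True)


# Hypothetical data: an established expert and a complete unknown.
videos = [
    Video("eminent_researcher", followers=500_000, views=1_000, held_past_two_seconds=300),
    Video("unknown_newcomer", followers=12, views=1_000, held_past_two_seconds=700),
]

ranked = [v.creator for v in rank_by_retention(videos)]
print(ranked)
```

In this toy example the newcomer with twelve followers outranks the researcher with half a million, because the only signal that counts is held attention.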

The network effect and the privatisation of the public square

Back in 2010, it had still seemed as though social media might be a new and enlightened public square. It seems hopelessly naïve now, but people genuinely believed that social media would provide a platform for civic engagement. Facebook’s early mission was “to make the world more open and connected”. It would be like a modern-day version of the agora of Ancient Greece.

The Arab Spring in 2011 demonstrated dramatically that gatekeepers of information were powerless to prevent individuals getting their content in front of vast numbers of people instantly. Anyone with an internet connection could create, distribute and shape a story.

But what we had failed to grasp was the power that would flow from those network effects. The more people join a network, the more valuable that network becomes for each individual. But this also means that powerful networks have very high switching costs.

Once your friends, family and followers are all on the same platform, leaving risks social ostracism. And unless everyone leaves at once, you can’t easily create an alternative platform.

Social media had given everyone a voice, but the rules of public debate were now in the hands of a few extremely powerful individuals. And the investors who had been funding social media’s expansion decided they were less interested in being Pericles than in being John D Rockefeller.

As the platforms grew, their algorithms started to throttle organic reach and replace it with the content they wanted you to see.

They had used your content to build their network, but now pages that had built audiences of millions found that only a small fraction of their followers were seeing their posts - unless they paid.

This matters because in a 2025 anti-trust case, Meta argued that it couldn’t be a social media monopoly, because… it wasn’t actually a social media company at all! Why? Because today, only 17% of the time people spend on Facebook involves them seeing content from friends or family. On Instagram it is just 7%.

The public square has been privatised. What began as a democratic revolution is now more accurately thought of as an advertising marketplace. Social media companies have become entertainment streaming companies.

For communicators, this means you must now buy the attention of the very audiences you helped build.

The consequences for communicators

Three lessons stand out for communicators today from this age of the algorithm.

1. You can’t communicate to “the public” anymore

There is no longer a single public square. There are hundreds of overlapping, self-curated micro-communities. Each has its own language, influencers and norms.

The old broadcast model of one message for everyone has collapsed. Communicators must now design for fragmentation: different formats for different audiences, delivered on the platforms they use by the people they trust.

That doesn’t mean pandering to echo chambers. It means understanding the digital ecosystems your audiences inhabit and tailoring tone, timing and messenger to fit.

2. You are competing for emotion, not just attention

Algorithms reward engagement, and engagement is driven by emotion or utility. The stories that spread are the ones that make people feel something - anger, joy, fear, pride, belonging.

Facts alone rarely travel far. But emotion without substance erodes trust. The challenge for communicators is to combine emotional punch with factual integrity: to frame truth in ways that resonate without distorting it.

That means moving beyond the idea of “raising awareness” to provoking response: writing, visualising and sequencing messages so they activate a feeling, a thought and an action.

If you can’t trigger a feeling, you won’t win the click. But if you can’t hold credibility, you won’t win the argument.

3. You don’t own your audience, you rent it

The audiences you spent years building on your social platforms are not yours. They belong to the platform. Algorithms decide who sees your content, when and how often.

Too many of us have focussed for too long on follower numbers, which give only an illusion of reach. Your following does not matter. What matters for reach is visibility, and visibility is determined by opaque systems increasingly optimised for engagement (not your authority, network, or public value). The danger for every communicator is that any algorithm can be tweaked overnight so that your followers no longer see your content.

That means communicators must diversify their channels and reclaim direct relationships through email lists, podcasts, events, owned media and partnerships. Renting reach from platforms is unavoidable, but relying on Elon Musk or Mark Zuckerberg is a strategic vulnerability. You need a portfolio, not a platform.

The age of the algorithm is where D-FACC hardens into the world we recognise today. Algorithms deepen Fragmentation (creating parallel realities), accelerate Corrosion (engagement outcompetes truth), and drive Concentration (visibility is controlled by a few platforms). Democratised publishing remains, but the rules of reach sit with the algorithm, not the author.

That’s all for now. Next time:

Thanks for reading and please subscribe or share if you enjoy my writing.

Simon

Deep Cuts:

Wu, T. (2017) The Attention Merchants: The epic struggle to get inside our heads.

Williams, D. (2025) Is Social Media Destroying Democracy - or giving it to us good and hard?